AIResearch

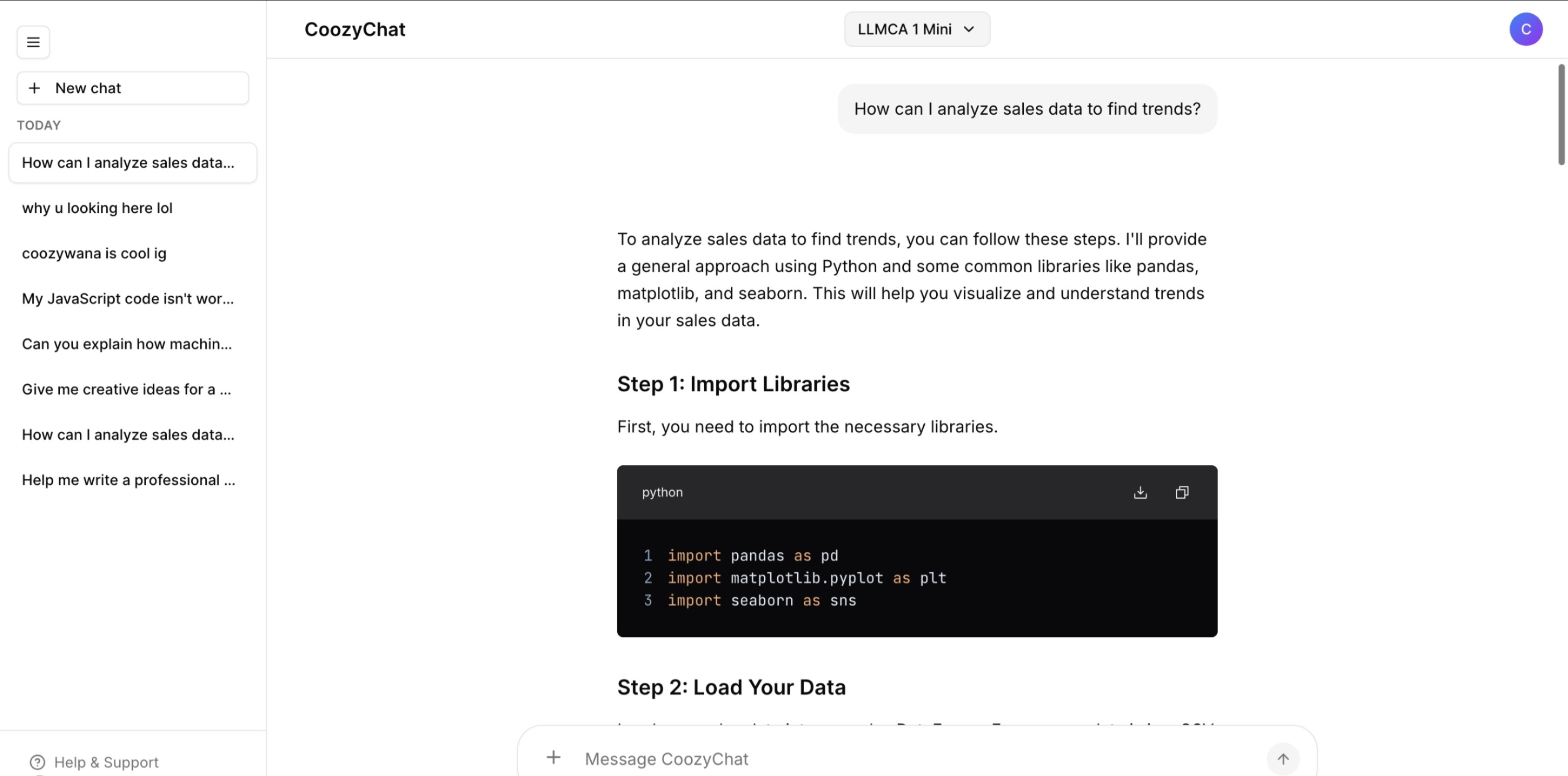

Update on LLMCA-1-1B Training

July 4, 2025

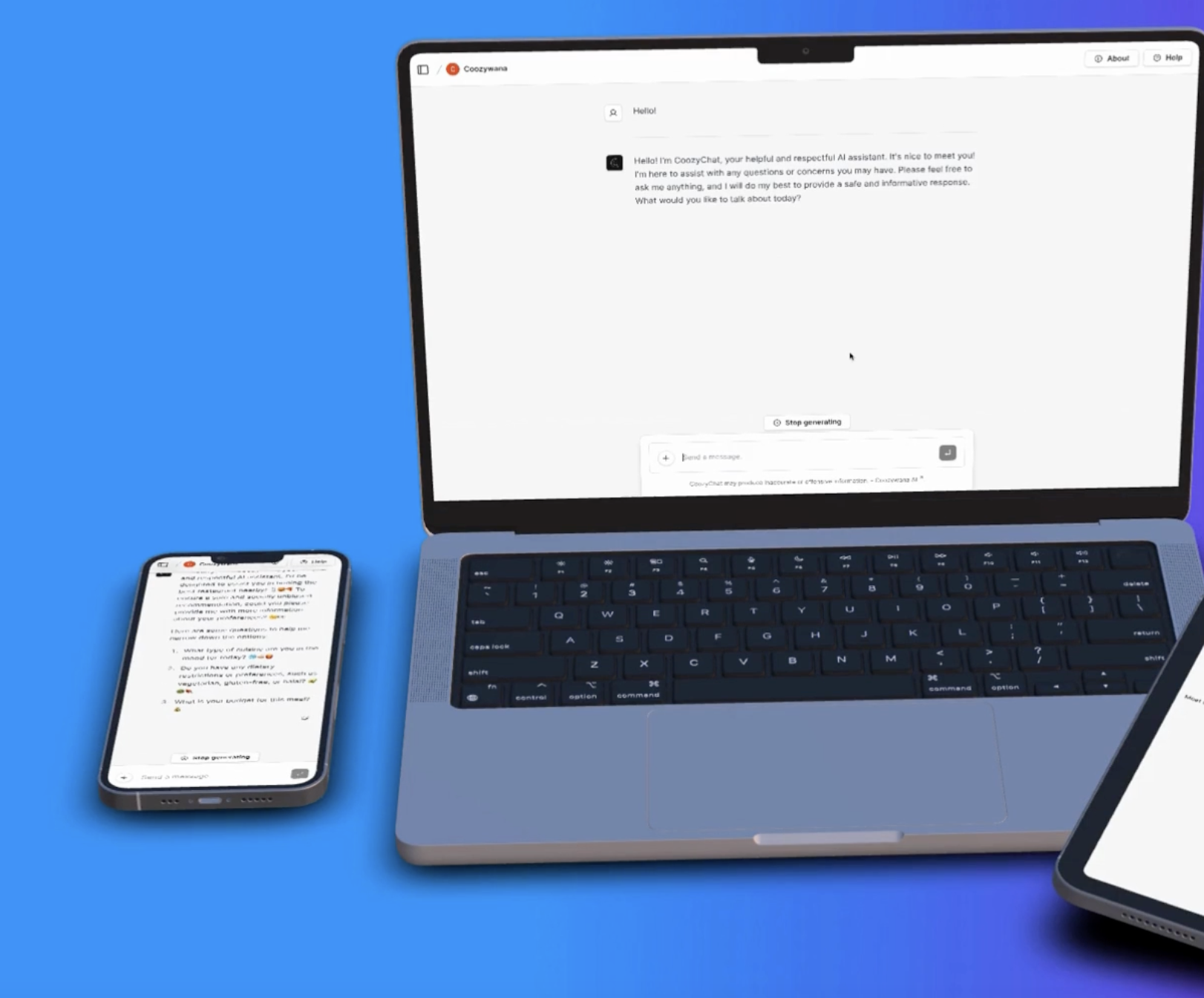

Since we began training our LLMCA-1-1B model earlier this year, it has progressed through multiple epochs on a custom-curated dataset spanning diverse domains.

Hardware constraints limited the context window size. Together with other challenges we encountered, this caused the model to sometimes produce incoherent, repetitive, or hallucinated output.

While the results weren’t as strong as we had hoped, the project has provided valuable insights for future development efforts.

We currently have no plans to release further updates on this model.